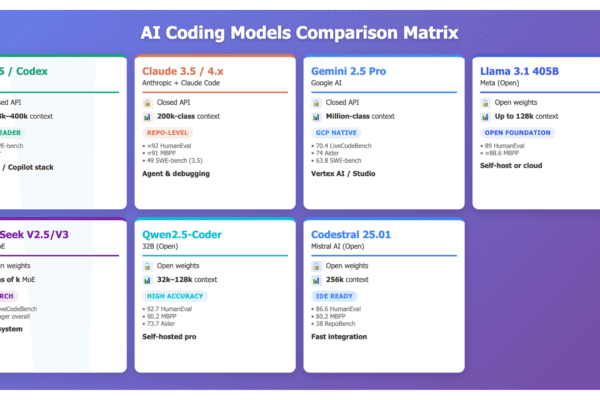

Comparing the Top 7 Large Language Models (LLMs) and Systems for Coding in 2025

Code-oriented large language models have moved from autocomplete tools to software engineering systems. In 2025, leading models must fix real GitHub issues, refactor multi-repo backends, write tests, and run as agents over long context windows. The main question for teams is no longer “can it code” but which model fits which constraints. Here are seven models (and systems…