AI Interview Series #1: Explain Some Text Generation Strategies Used in LLMs

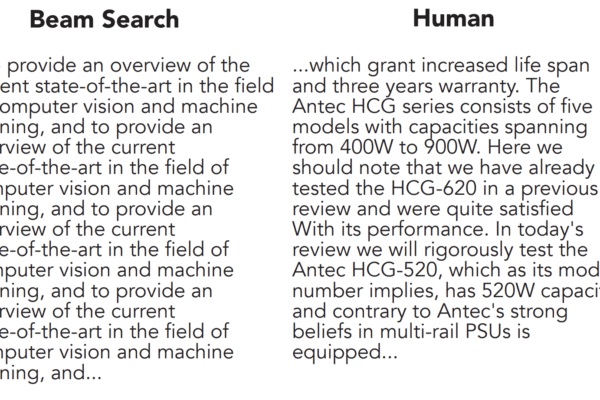

Every time you prompt an LLM, it doesn’t generate a complete answer all at once. It builds the response one token at a time, where a token is a word or a piece of a word. At each step, the model predicts a probability distribution over the possible next tokens based on everything written so far. But knowing probabilities alone isn’t enough…
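To make the token-by-token loop concrete, here is a minimal sketch in plain Python. Everything in it is illustrative: `TOY_LOGITS` is a made-up score table standing in for a real model (and it looks only at the previous token, whereas a real LLM conditions on the whole context), and `pick_most_likely` is just a placeholder selection rule so the loop can run.

```python
import math

# Hypothetical toy "model": raw scores (logits) for the next token, keyed by
# the previous token only. A real LLM conditions on the entire context.
TOY_LOGITS = {
    "the": {"cat": 2.0, "dog": 1.5, "<eos>": -1.0},
    "cat": {"sat": 2.5, "ran": 1.0, "<eos>": 0.0},
    "dog": {"ran": 2.0, "sat": 0.5, "<eos>": 0.0},
    "sat": {"<eos>": 3.0, "the": 0.5},
    "ran": {"<eos>": 3.0, "the": 0.5},
}


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def next_token_probs(tokens):
    """Probability of each candidate next token given the tokens so far."""
    return softmax(TOY_LOGITS[tokens[-1]])


def pick_most_likely(probs):
    """Placeholder selection rule: take the highest-probability token."""
    return max(probs, key=probs.get)


def generate(prompt, choose=pick_most_likely, max_new_tokens=10):
    """Build the output one token at a time until <eos> or the length cap."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)  # step 1: score every candidate
        token = choose(probs)             # step 2: decide which one to emit
        if token == "<eos>":
            break
        tokens.append(token)              # step 3: feed it back as context
    return tokens


print(generate(["the"]))  # ['the', 'cat', 'sat']
```

The `choose` argument is the part that matters here: the probabilities come from the model, but the rule that turns them into an actual token is a separate decision, and swapping that rule changes the generated text while the surrounding loop stays the same.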