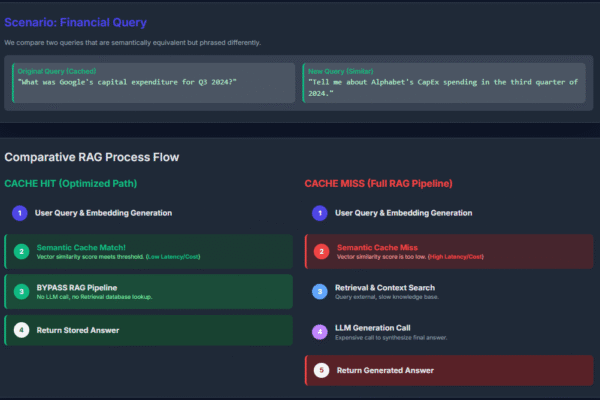

How to Reduce Cost and Latency of Your RAG Application Using Semantic LLM Caching

Semantic caching improves the performance of LLM (Large Language Model) applications by storing responses and reusing them for semantically similar queries, rather than only for exact text matches. When a new query arrives, it’s converted into an embedding and compared against the embeddings of previously cached queries using similarity search. If a close match is found (above a similarity threshold), the cached response…
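To make the lookup flow described above concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a production design: `toy_embed` is a hypothetical stand-in (a bag-of-words count vector) for a real embedding model, `SemanticCache`, `answer`, and `llm_fn` are names invented for this example, the 0.8 threshold is arbitrary, and the linear scan stands in for a proper vector index.

```python
import math
from collections import Counter


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse vectors stored as dict-like objects."""
    dot = sum(a[k] * b.get(k, 0) for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Illustrative cache keyed by query embeddings instead of exact strings."""

    def __init__(self, embed_fn, threshold=0.8):
        self.embed_fn = embed_fn
        self.threshold = threshold  # assumed value; tune for your data
        self.entries = []  # list of (query_embedding, response) pairs

    def lookup(self, query):
        """Return the most similar cached response at or above the threshold, else None."""
        query_vec = self.embed_fn(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:  # linear scan; a real system would use a vector index
            score = cosine_similarity(query_vec, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, query, response):
        """Cache a response under the embedding of the query that produced it."""
        self.entries.append((self.embed_fn(query), response))


def answer(query, cache, llm_fn):
    """Serve from the cache when possible; otherwise call the LLM and cache the result."""
    cached = cache.lookup(query)
    if cached is not None:
        return cached, True  # cache hit: no LLM call made
    response = llm_fn(query)
    cache.store(query, response)
    return response, False  # cache miss
```

In use, `llm_fn` would wrap whatever model call the application already makes, and the boolean returned by `answer` indicates whether that call was skipped. The threshold is the main tuning knob: set it too low and unrelated queries receive stale answers, set it too high and the cache rarely hits.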