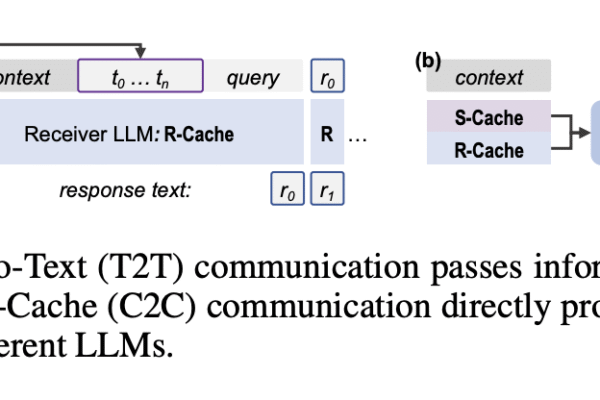

Cache-to-Cache (C2C): Direct Semantic Communication Between Large Language Models via KV-Cache Fusion

Can large language models collaborate without sending a single token of text? A team of researchers from Tsinghua University, Infinigence AI, The Chinese University of Hong Kong, Shanghai AI Laboratory, and Shanghai Jiao Tong University says yes. Cache-to-Cache (C2C) is a new communication paradigm in which large language models exchange information through their KV-Cache rather than…