How to Reduce Cost and Latency of Your RAG Application Using Semantic LLM Caching

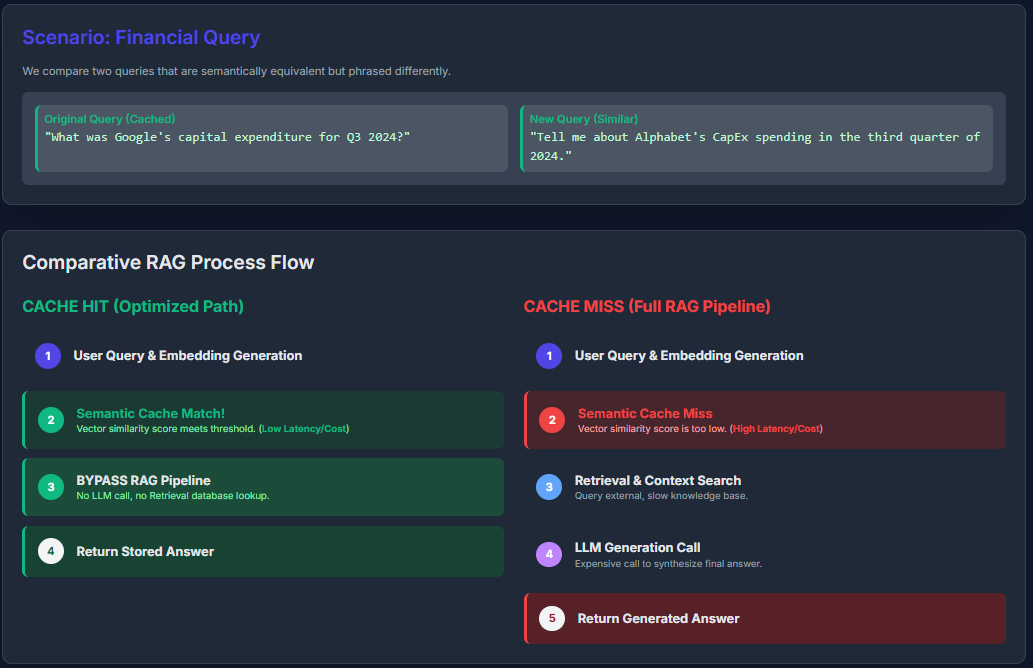

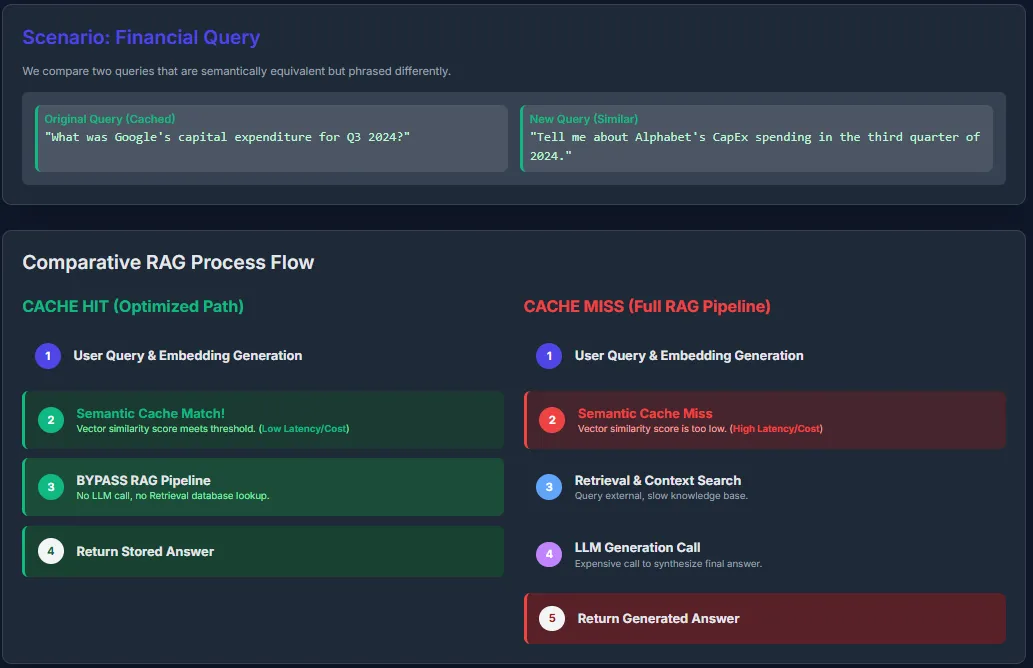

Semantic caching in LLM (Large Language Model) applications optimizes performance by storing and reusing responses based on semantic similarity rather than exact text matches. When a new query arrives, it’s converted into an embedding and compared with cached ones using similarity search. If a close match is found (above a similarity threshold), the cached response is returned instantly—skipping the expensive retrieval and generation process. Otherwise, the full RAG pipeline runs, and the new query-response pair is added to the cache for future use.

In a RAG setup, semantic caching typically saves responses only for questions that have actually been asked, not every possible query. This helps reduce latency and API costs for repeated or slightly reworded questions. In this article, we’ll take a look at a short example demonstrating how caching can significantly lower both cost and response time in LLM-based applications.

Semantic caching functions by storing and retrieving responses based on the meaning of user queries rather than their exact wording. Each incoming query is converted into a vector embedding that represents its semantic content. The system then performs a similarity search—often using Approximate Nearest Neighbor (ANN) techniques—to compare this embedding with those already stored in the cache.

If a sufficiently similar query-response pair exists (i.e., its similarity score exceeds a defined threshold), the cached response is returned immediately, bypassing expensive retrieval or generation steps. Otherwise, the full RAG pipeline executes, retrieving documents and generating a new answer, which is then stored in the cache for future use.
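
The lookup step can be sketched with a brute-force scan, which stands in here for an ANN index. This is a minimal sketch under stated assumptions rather than a production implementation: `best_cache_match` is a hypothetical helper name, and the 3-dimensional vectors are toy values standing in for real embeddings.

```python
import numpy as np

def best_cache_match(query_emb, cached_embs, threshold=0.85):
    """Return (index, similarity) of the closest cached embedding.

    The index is None when the cache is empty or the best score
    does not exceed the threshold (a cache miss).
    """
    if len(cached_embs) == 0:
        return None, 0.0
    matrix = np.asarray(cached_embs, dtype=float)
    query = np.asarray(query_emb, dtype=float)
    # Cosine similarity of the query against every cached row at once.
    sims = matrix @ query / (np.linalg.norm(matrix, axis=1) * np.linalg.norm(query))
    best = int(np.argmax(sims))
    if sims[best] > threshold:
        return best, float(sims[best])
    return None, float(sims[best])

# Toy vectors (assumed values, not real embeddings).
cache = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
hit = best_cache_match([0.9, 0.1, 0.0], cache)   # close to the first entry
miss = best_cache_match([0.5, 0.5, 0.7], cache)  # not close to either entry
```

Scanning every entry costs time linear in the cache size, which is fine for small caches; an ANN index becomes worthwhile once the cache holds many thousands of entries.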

In a RAG application, semantic caching only stores responses for queries that have actually been processed by the system—there’s no pre-caching of all possible questions. Each query that reaches the LLM and produces an answer can create a cache entry containing the query’s embedding and corresponding response.

Depending on the system’s design, the cache may store just the final LLM outputs, the retrieved documents, or both. To maintain efficiency, cache entries are managed through policies like time-to-live (TTL) expiration or Least Recently Used (LRU) eviction, ensuring that only recent or frequently accessed queries remain in memory over time.
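
The two eviction policies mentioned above can be combined in a few lines. The following is an illustrative sketch only: `TTLLRUCache`, its parameters, and the injectable `clock` argument are hypothetical names chosen for this example, and a real deployment would more likely rely on an existing cache store.

```python
import time
from collections import OrderedDict

class TTLLRUCache:
    """Small in-memory cache with TTL expiry and LRU eviction."""

    def __init__(self, max_entries=1000, ttl_seconds=3600.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = OrderedDict()  # key -> (stored_at, value), least recent first

    def get(self, key):
        item = self._entries.get(key)
        if item is None:
            return None
        stored_at, value = item
        if self.clock() - stored_at > self.ttl_seconds:
            del self._entries[key]  # expired: drop it and report a miss
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._entries[key] = (self.clock(), value)
        self._entries.move_to_end(key)
        while len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
```

In a semantic cache, the key would typically be an identifier for the cached query, with the similarity search deciding which key to look up.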

Installing dependencies

Setting up the dependencies

    import os
    from getpass import getpass

    os.environ['OPENAI_API_KEY'] = getpass('Enter OpenAI API Key: ')

For this tutorial, we will be using OpenAI, but you can use any LLM provider.

    from openai import OpenAI

    client = OpenAI()

Running Repeated Queries Without Caching

In this section, we run the same query 10 times directly through the GPT-4.1 model to observe how long it takes when no caching mechanism is applied. Each call triggers a full LLM computation and response generation, leading to repetitive processing for identical inputs.

This helps establish a baseline for total time and cost before we implement semantic caching in the next part.

    import time

    def ask_gpt(query):
        start = time.time()
        response = client.responses.create(
            model="gpt-4.1",
            input=query
        )
        end = time.time()
        return response.output[0].content[0].text, end - start

    query = "Explain the concept of semantic caching in just 2 lines."

    total_time = 0
    for i in range(10):
        _, duration = ask_gpt(query)
        total_time += duration
        print(f"Run {i+1} took {duration:.2f} seconds")

    print(f"\nTotal time for 10 runs: {total_time:.2f} seconds")

Even though the query remains the same, every call still takes between 1 and 3 seconds, resulting in a total of ~22 seconds for 10 runs. This inefficiency highlights why semantic caching can be so valuable: it allows us to reuse previous responses for semantically identical queries and save both time and API cost.

Implementing Semantic Caching for Faster Responses

In this section, we enhance the previous setup by introducing semantic caching, which allows our application to reuse responses for semantically similar queries instead of repeatedly calling the GPT-4.1 API.

Here’s how it works: each incoming query is converted into a vector embedding using the text-embedding-3-small model. This embedding captures the semantic meaning of the text. When a new query arrives, we calculate its cosine similarity with embeddings already stored in our cache. If a match is found with a similarity score above the defined threshold (e.g., 0.85), the system instantly returns the cached response — avoiding another API call.

If no sufficiently similar query exists in the cache, the model generates a fresh response, which is then stored along with its embedding for future use. Over time, this approach dramatically reduces both response time and API costs, especially for frequently asked or rephrased queries.

    import numpy as np
    from numpy.linalg import norm

    # Each entry is a (query, embedding, response) tuple.
    semantic_cache = []

    def get_embedding(text):
        emb = client.embeddings.create(model="text-embedding-3-small", input=text)
        return np.array(emb.data[0].embedding)

    def cosine_similarity(a, b):
        return np.dot(a, b) / (norm(a) * norm(b))

    def ask_gpt_with_cache(query, threshold=0.85):
        query_embedding = get_embedding(query)

        # Check similarity with existing cache; the first entry above the threshold wins
        for cached_query, cached_emb, cached_resp in semantic_cache:
            sim = cosine_similarity(query_embedding, cached_emb)
            if sim > threshold:
                print(f"🔁 Using cached response (similarity: {sim:.2f})")
                return cached_resp, 0.0  # no LLM call; the embedding request is not timed

        # Otherwise, call GPT
        start = time.time()
        response = client.responses.create(
            model="gpt-4.1",
            input=query
        )
        end = time.time()
        text = response.output[0].content[0].text

        # Store in cache
        semantic_cache.append((query, query_embedding, text))
        return text, end - start

    queries = [
        "Explain semantic caching in simple terms.",
        "What is semantic caching and how does it work?",
        "How does caching work in LLMs?",
        "Tell me about semantic caching for LLMs.",
        "Explain semantic caching simply.",
    ]

    total_time = 0
    for q in queries:
        resp, t = ask_gpt_with_cache(q)
        total_time += t
        print(f"⏱️ Query took {t:.2f} seconds\n")

    print(f"\nTotal time with caching: {total_time:.2f} seconds")

In the output, the first query took around 8 seconds as there was no cache and the model had to generate a fresh response. When a similar question was asked next, the system identified a high semantic similarity (0.86) and instantly reused the cached answer, saving time. Some queries, like “How does caching work in LLMs?” and “Tell me about semantic caching for LLMs,” were sufficiently different, so the model generated new responses, each taking over 10 seconds. The final query was nearly identical to the first one (similarity 0.97) and was served from cache instantly.
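
Note that the timer above reports 0.00 seconds for cache hits, although every query still pays for one embedding request before the cache is checked. A rough back-of-the-envelope model of total latency can make that trade-off explicit. The function name and all timing figures below are hypothetical assumptions for illustration, not measurements from this run; only the counts (5 queries, 3 cache misses) come from the example above.

```python
def estimate_total_latency(num_queries, num_misses, llm_seconds, embed_seconds):
    """Every query pays for one embedding call; only misses pay for generation."""
    if not 0 <= num_misses <= num_queries:
        raise ValueError("num_misses must be between 0 and num_queries")
    return num_queries * embed_seconds + num_misses * llm_seconds

# Assumed figures: 9 s per generation and 0.2 s per embedding call.
with_cache = estimate_total_latency(5, 3, llm_seconds=9.0, embed_seconds=0.2)
# Without a cache there is no embedding step and every query is a "miss".
without_cache = estimate_total_latency(5, 5, llm_seconds=9.0, embed_seconds=0.0)

print(f"Estimated with cache: {with_cache:.1f} s, without cache: {without_cache:.1f} s")
```

Under this model, caching pays off whenever the generation time saved on hits exceeds the embedding overhead added to every query, so the benefit grows with the hit rate.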
